Programming in Virtual Reality: An Interview with the Creator of RiftSketch

One week ago, I tried on a Samsung Gear VR. Five minutes into playing Gunjack, I knew that the future had arrived. When I got home that night, I realized how odd my multi-monitor setup was. If a consumer-level smartphone could run an immersive video game, what could more advanced machines do for productivity? I had spent nearly $200 on a new display that could instead have been spawned in a VR environment.

I went looking for an answer in the form of a virtual programming interface. Does Sublime Text have a plugin for coding in VR? Does the Oculus Store have any text editors?

Unfortunately, there weren’t any text editors for coding in VR, and Sublime Text doesn’t have any VR packages. What I did find was a handful of adventurous people building the future of programming. Enter Brian Peiris.

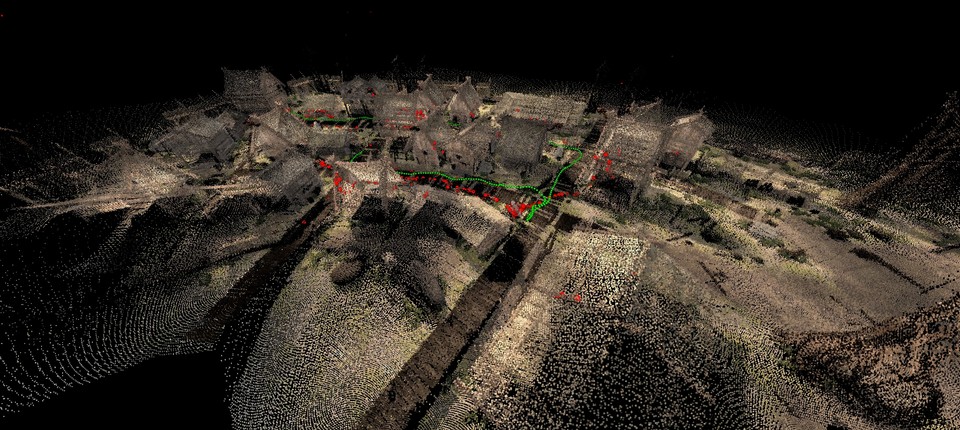

In October 2014, Brian released a video of RiftSketch: a programming interface that uses the Three.js library to render models in virtual reality in real time. Not only can you code entirely in VR, you can also simply look to your left and see 3D objects rendering live beside the text input window. As soon as I saw the video, I knew this was the future of productivity. Brian’s year-old demonstration on a developer version of the Oculus Rift was just a proof of concept, but it marked the beginning of a new kind of development environment.

I was lucky enough to interview Brian this past week and get a sense of where RiftSketch stands today and what he thinks about the future of programming in virtual reality. Here is that interview.

Q: Is RiftSketch the future of interfaces or simply a cool demo?

RiftSketch is meant to be a toy. It doesn’t do anything useful yet; I’ve just been playing around with VR to do some cool stuff. I never intended to make a full IDE (integrated development environment).

As for the future of virtual reality development, I’d like to see more work done on productivity applications. In the future, maybe two or three years from now, I’d like to be able to use a desktop VR interface. Having that virtual space to work in would be a big benefit for productivity.

Q: What was the most challenging part about creating RiftSketch's interface?

RiftSketch is obviously pretty simple. I had to work through a few technical limitations of the platform, and once I got past those hurdles, I just wanted to make a plain text editor. One of the first lessons I learned was that, even though VR in its current form is only about two years old, we’ve already learned a lot about what you don’t want to do. The first lesson is that you almost never want to create the typical video game HUD that’s locked to your head. It breaks immersion, and it forces the user to always have something in their near field of view. I avoided that right off the bat.

The editor I created was part of the world rather than a GUI (graphical user interface) attached directly to the user’s view. I started with a 2D plane and slapped a text editor on it. The text editor is super simple: it’s just plain text, with no syntax highlighting or anything.

Another problem is not being able to see your keyboard. The latest version of RiftSketch lets you use a Leap Motion camera to see your keyboard. You can also grab the text editor and move it around in 3D. It feels like you’re moving papers around on your desk, like you’re playing around in your own personal scene. If you spawn a cube in your scene and it gets in the way, you can just move your text editor out of the way. It’s a very natural feeling.
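To make the difference between a head-locked HUD and a world-locked editor concrete, here is a minimal sketch of our own, not RiftSketch’s code. It uses plain TypeScript with a hypothetical `Vec3` type and `HeadPose` (position plus yaw only) instead of Three.js so that it runs standalone; the names `hudPosition`, `WorldPanel`, and `grabAndMove` are illustrative assumptions. The HUD is recomputed from the head pose every frame, while the world-locked panel keeps its scene position until the user explicitly grabs and moves it.

```typescript
// Minimal 3D vector type (hypothetical); a Three.js scene would use THREE.Vector3 instead.
type Vec3 = { x: number; y: number; z: number };

// Hypothetical head pose: position plus yaw (rotation about the vertical y axis, in radians).
interface HeadPose {
  position: Vec3;
  yaw: number;
}

function add(a: Vec3, b: Vec3): Vec3 {
  return { x: a.x + b.x, y: a.y + b.y, z: a.z + b.z };
}

// Rotate a vector about the y axis (right-handed, so positive yaw turns -z toward -x).
function rotateY(v: Vec3, yaw: number): Vec3 {
  const c = Math.cos(yaw);
  const s = Math.sin(yaw);
  return { x: c * v.x + s * v.z, y: v.y, z: -s * v.x + c * v.z };
}

// Head-locked HUD: recomputed from the head pose every frame, so it follows every head turn.
function hudPosition(head: HeadPose, offset: Vec3): Vec3 {
  return add(head.position, rotateY(offset, head.yaw));
}

// World-locked panel: stored in scene coordinates, so head motion never moves it.
class WorldPanel {
  position: Vec3;

  constructor(position: Vec3) {
    this.position = position;
  }

  // Only an explicit grab-and-drag (e.g. from hand tracking) changes where the panel sits.
  grabAndMove(delta: Vec3): void {
    this.position = add(this.position, delta);
  }
}
```

Turning your head 90 degrees drags the HUD along with it, while the `WorldPanel` stays exactly where you left it, like a sheet of paper on a desk.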

Even with those solutions, input is something I largely sidestepped in RiftSketch. Not only do you need to be a JavaScript programmer, you also absolutely have to be a touch typist to use it. It’s totally keyboard-oriented, with no mouse at all. That was my personal preference, but it isn’t suitable for the average user who isn’t a touch typist or who needs the mouse frequently. Text entry in VR is a hard problem to solve. One solution I came up with was feeding a webcam image into my VR environment, which let me see my hands while I type. But that introduces another obstacle: if you move your head, you don’t see your hands anymore. Plus, you need another piece of hardware (a webcam).

I don’t see a way around touch typing for now. I don’t want another input device for typing; I’m just so used to my keyboard. There are very few ways of getting around using all 10 of your fingers.

[Image: A handheld chorded keyboard.]

I still want a keyboard, but I’m experimenting with a handheld chorded keyboard, where you hold multiple keys in different combinations to type different characters. That would let me keep coding while walking around the 3D environment I’m building.
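The core idea of chording, where a combination of keys held together produces one character, can be sketched in a few lines. This is a hypothetical illustration, not the device Brian is testing: it assumes a five-key handheld chorder (one key per finger), treats each key as one bit of a mask, and uses a made-up chord table. A character is emitted only once every key in the chord has been released, which is a common way to read chords.

```typescript
// Hypothetical five-key handheld chorder: one key per finger.
const KEYS = ["thumb", "index", "middle", "ring", "pinky"] as const;
type Key = (typeof KEYS)[number];

// Each key contributes one bit, so a chord is just a bitmask (31 possible non-empty chords).
function bit(k: Key): number {
  return 1 << KEYS.indexOf(k);
}

// Illustrative chord table (an assumption); real chorded layouts assign these differently.
const CHORDS = new Map<number, string>([
  [bit("thumb"), " "],
  [bit("index"), "e"],
  [bit("middle"), "t"],
  [bit("index") | bit("middle"), "a"],
  [bit("ring"), "o"],
]);

// Accumulates keys while they are held and emits a character once all are released.
class ChordReader {
  private held = new Set<Key>();
  private chord = 0;

  press(k: Key): void {
    this.held.add(k);
    this.chord |= bit(k);
  }

  // Returns the decoded character on full release, or undefined while keys are
  // still down (or if the chord is not in the table).
  release(k: Key): string | undefined {
    this.held.delete(k);
    if (this.held.size > 0) return undefined;
    const ch = CHORDS.get(this.chord);
    this.chord = 0;
    return ch;
  }
}
```

Pressing index and middle together and then releasing both yields `"a"`, even though each finger alone would type a different letter. With only five keys, a single hand can cover 31 distinct chords without needing to see a keyboard.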

Q: Do you think interfaces will be different for every person or will we drift towards a unified interface paradigm?

I think we’re going to converge on a common set of principles, though they’ll differ for each application. For gaming, for example, you don’t need text entry. Six-degree-of-freedom controllers held in your hands will be common. That’s a no-brainer for me.

As for UI, it’s going to keep evolving, just like it did on the desktop. There are going to be some commonalities that become paradigms, and they’ll evolve into different things. It’s hard to say where we’ll go with that. We have to start from scratch and relearn the things we’re used to on desktops, just as we did with smartphones and touch devices. Eventually the industry will converge on a set of principles that work best.

Q: Does VR create new opportunities for visual programming languages?

[Image: An example of Microsoft's Visual Programming Language.]

That’s one of the things people ask all the time when they see RiftSketch. I don’t really like that because once you start making your code a visual abstraction, you start to lose the expressiveness of your code. You’re no longer dealing with the verbs and language of the code, you’re just plugging things together.

When you’re dealing with a huge program with thousands of nodes, it gets pretty tough. It’s been tried several times in the past, and it’s super interesting, but I don’t think it will happen any time soon. There are many kinds of data visualization that work well in 2D, but we need to establish the equivalent best practices for VR before we can even think about manipulating code in VR.

Q: Finally, what are the biggest hurdles to overcome for VR development environments to go mainstream?

Right now it’s very hard to read and write code properly, because the resolution of our headsets is still too low. In real-world terms, RiftSketch is like a 10-foot screen 5 feet away from you. Each character has to be about a foot tall just to be readable.
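The reason resolution dictates text size comes down to visual angle: what matters is how many degrees an object covers in your view and how many pixels the headset can devote to each degree. The sketch below is an illustrative calculation, not something from the interview. The function names are our own, and `pixelsPerDegree` is an assumed input rather than a measured spec for any particular headset.

```typescript
// Visual angle (degrees) subtended by an object of height `size` seen head-on at `distance`.
// `size` and `distance` just need to share a unit (feet here).
function angularSizeDegrees(size: number, distance: number): number {
  return (2 * Math.atan(size / (2 * distance)) * 180) / Math.PI;
}

// Pixels the headset can spend on that angle; pixelsPerDegree is an assumed input,
// not a measured spec for any particular headset.
function pixelsAcross(angleDeg: number, pixelsPerDegree: number): number {
  return angleDeg * pixelsPerDegree;
}

// Brian's comparison: a 10-foot screen viewed from 5 feet away.
const screenAngle = angularSizeDegrees(10, 5); // about 90 degrees
```

A 10-foot screen at 5 feet fills about 90 degrees of view, and by the same formula a one-foot character at 5 feet covers about 11.4 degrees. The fewer pixels a headset packs into each degree, the more degrees each character must span before it gets enough pixels to be legible.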

Input is also a major obstacle. Input and resolution are the two barriers to making work environments useful. Most people think higher resolution is just a matter of time, but I think it’s a huge technical hurdle on the display side. Magic Leap's device, for example, supposedly uses a light field display instead of a typical pixel-based display. We might have to wait for a hardware breakthrough before we have usable VR development environments.

Brian Peiris is currently a developer at AltspaceVR, a social platform in virtual reality. He is the creator of RiftSketch, a programming interface for Three.js in virtual reality, and he recently co-wrote Virtual Reality in Software Engineering: Affordances, Applications, and Challenges.

You can find Brian on Twitter and GitHub.
